The data warehouse concept emerged for strategic decision making at the executive management level business, need to analyze and understand the trend for forecasting about future and to lead the business in right direction. For this they need the data collected over years in the past by way of many transactions for customers.

These piles of historical data are sitting in the operational or transactional databases or file systems of many organizations. These systems process day-to-day transaction and store the data in normalized database named OLTP system.

But this is not much useful for the business community as it is for strategic decision making. These data might be scattered across many different geographical locations, over long duration generated by historically different systems.

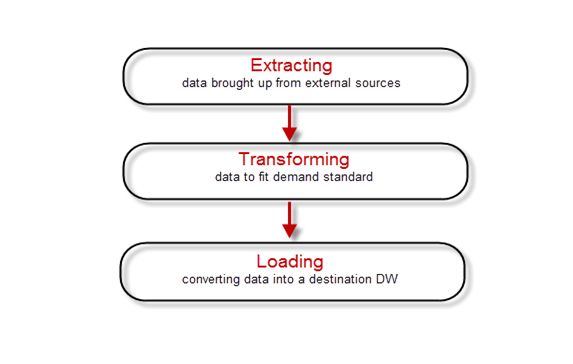

ETL process extracts these heterogeneous data from different sources, validates, cleans using some business rules and transforms to homogeneous data and load it into the target database i.e data warehouse. These data is de-normalized for easy analytical processing and reporting.

As a tester, we need to validate the data flow between source to target by proving that all the source data are transformed as per the business rule and available in target database accurately and completely without any data loss or duplication.

Purpose of ETL Testing

- To verify the correctness of data transformation against the signed off business requirements and rules.

- To verify that expected data is loaded into data mart or data warehouse without loss of any data.

- To validate the accuracy of reconciliation reports.

- To make sure complete process meet performance and scalability requirements

- Data security is also sometimes part of ETL testing

- To evaluate the reporting efficiency

Types of ETL Testing

Reconciliation testing:

In this type of testing, matching of count of records is checked. Although this is not the best way, but in case of lack of time, it helps. Reconciliation Testing / Source to Target count testing

List of SQL queries to find out the values:

To find out values from Source Table: select count (*) from source table where (conditions)

To find out values from Target Table: select count (*) from target table where (conditions)

Expected Output: – Source count = Target Count

Constraint testing:

Here locates data from source to target and identify whether the data is mapped or not. Following are the key checks: UNIQUE, NULL, NOT NULL, Primary Key, Foreign key, DEFAULT, CHECK

Validation testing (source to target data):

It is generally executed in critical or financial projects. This type of testing validates each data point and match source to target data.

Testing for duplicate check:

It is ensure that there are no duplicate values for unique columns. Duplicate data can arise due to any reason like missing primary key etc.

Below is one example: Testing for Duplicate Check

select emp_id,emp_name,emp_sal count(*) from employee group by emp_id,emp_name,emp_sal having count(*)>1

Testing for attribute check:

To check whether all attributes of source system are present in target table.

Logical or transformation testing:

To test any logical gaps should depending upon the scenarios, following methods can be used: boundary value analysis, equivalence partitioning, comparison testing, error guessing or sometimes, graph based testing methods. It also covers testing for look-up conditions.

Incremental and historical data testing:

Test to check the data integrity of old & new data with the addition of new data. It also covers the validation of purging policy related scenarios.

GUI / navigation testing:

To check the navigation or GUI aspects of the front end reports.

Checklist for ETL testing In Data Integration

- Check for the Connection & Availability of the Testing Schema & all the target tables that will be involved in testing.

- Check for the Naming Conventions (e.g.) Check whether the names specified in the mapping document matches with that of the Target Tables created in the Testing Schema.

- Check for the Data Types/Size of the Columns that are available in the Target table to avoid unnecessary rejections due to size constraints

- Check whether all the Lookup tables are loaded with appropriate data.

- Try to gain insight of the real-time scenario’s that the project deals with.

- Check for the record counts

(e.g.) Source File contains – > 100 records, Out of which 80 was moved to staging table using Business filters. Once the development team loads the data in the target table. Tester has to consider 3 scenarios:

If the target tables have 80 records, it means no records are rejected

If the target table have say 60 records, then 20 records should have been rejected and should be available in reject file/Error Table

If the target tables have say 85 records, which is fundamentally incorrect. - Validate each & every mapping rule specified in the mapping document, by writing an SQL querying the form (Staging table + Mapping Rule) – Target table = No Rows returned. If rows returned, analyze them & find whether the reason for rejection is appropriate.

- Check for the Referential Integrity Constraints, by segregation all the Tables with Primary/Foreign Key constraints & write queries for RI validation.

- Check for duplicates of Primary Key Columns

- Validate the Null & Non-null Columns

- Validate the realtor tables that help in maintaining the historical data

- Give due importance to the following conditions:

Certain records might get rejected even before loading the data into the staging table.

Certain records might get rejected after loading into the staging but before moving to the target.

Conclusion

ETL testing will help to ensure the best work possible is being done and will confirm the correctness of data being transformed. This is measured against the rules and requirements of the company itself. It also verifies that the transformed data can loaded without any loss of information, is used to validate the accuracy of reports, ensures that the process meets performance requirements, and finally, is used to evaluate the entire reporting structure.